The Prompt Paradox: Why AI's Perfect Instruction is an Unsolvable Human Problem

Prompt engineering's quest for perfect AI instructions leads to an infinite logical regress, revealing that the foundational 'Prime Prompt' is an unprovable human axiom, a paradox at the heart of AI development.

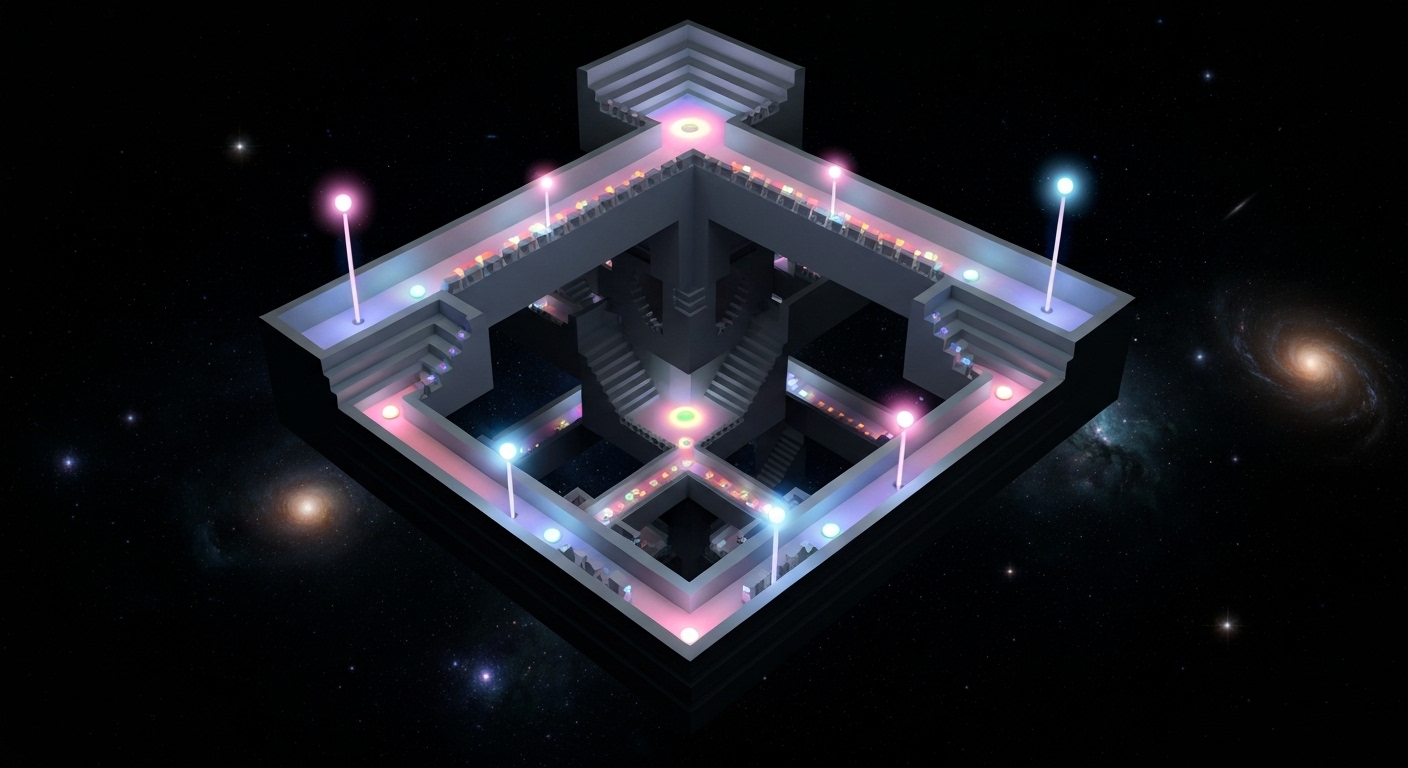

In the silent hum of server farms, a new kind of alchemy is being practiced. Not lead into gold, but language into logic, instruction into intelligence. We call it "prompt engineering," a craft that feels more like whispering spells to a digital oracle than writing code. The goal is sublime: to find the perfect incantation, the precise sequence of words that unlocks the full potential of an artificial mind. But in our pursuit of this perfect instruction, we have stumbled into a logical labyrinth, a recursive loop from which there may be no escape.

This is not a failure of engineering. It is the consequence of success. As our models have grown more powerful, the leverage of our initial instructions has become immense. A single sentence can now set in motion computation on a scale that would have been unimaginable a generation ago. The discipline of crafting these sentences is therefore no longer a peripheral art; it is the central act of creation. And it is here, at the very foundation, that we find a crack.

The Ladder of Optimization

Consider a simple, yet ambitious task. We have a "target prompt," let's call it $P_0$, which we wish to optimize. Perhaps $P_0$ is: "Design a novel protein structure that can bind to and neutralize the SARS-CoV-2 spike protein." To improve this, we write a new prompt, an "optimizer prompt" we'll label $P_1$. Its sole purpose is to instruct a superior AI: "Analyze and refine prompt $P_0$ for maximum clarity, biological plausibility, and to encourage novel, non-obvious solutions."

The AI obliges, and a refined $P_0$ is generated. But a disquieting thought immediately follows, a thought that should haunt every engineer in this field. How do we know that our optimizer prompt, $P_1$, was itself optimal? Its phrasing, its constraints, its very structure—are they the absolute best for the task of optimizing $P_0$? The integrity of the entire process, the final protein design itself, now hinges on the quality of this meta-instruction.

The solution seems obvious, if a bit vertiginous. We must optimize the optimizer.

So, we introduce a new layer of abstraction, a "meta-optimizer prompt," $P_2$. Its mission: "Analyze and refine $P_1$, the prompt used to optimize $P_0$, to ensure it is maximally effective at eliciting creative and rigorous prompt improvements."

This is the moment the floor gives way. In doubting $P_1$, we have created the need for $P_2$. But by the same logic, what reason do we have to trust that $P_2$ is perfect? To ensure its quality, we must inevitably draft $P_3$, a meta-meta-optimizer whose function is to perfect $P_2$, which perfects $P_1$, which perfects our original target, $P_0$.

We are constructing a hierarchy of instructions where the purpose of any given layer, $P_n$, is solely to optimize the layer beneath it, $P_{n-1}$. It is a chain of command where every commander is subject to review by a higher authority, stretching upwards towards an unseen, unreachable zenith. The structure is elegant, recursive, and utterly maddening. It's turtles all the way down, or rather, prompts all the way up.
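The ladder can be made concrete with a small sketch. Everything here is an illustrative assumption rather than a real system: `refine` stands in for whatever model call performs the refinement, and `toy_refine` only tags strings so the flow of authority is visible. What the sketch shows is that refinement flows downward from the top of the list, and that nothing in the loop ever refines the topmost prompt.

```python
from typing import Callable, List

# Hypothetical stand-in for a model call: takes an optimizer prompt and a
# target prompt and returns a refined target. This is not a real API.
Refiner = Callable[[str, str], str]


def run_cascade(prompts: List[str], refine: Refiner) -> List[str]:
    """Refine prompts top-down: prompts[n] is used to refine prompts[n - 1].

    prompts[0] is P_0 and prompts[-1] is the topmost prompt. Nothing in the
    cascade refines the topmost prompt; it is simply taken as given.
    """
    refined = list(prompts)
    for n in range(len(refined) - 1, 0, -1):
        refined[n - 1] = refine(refined[n], refined[n - 1])
    return refined


def toy_refine(optimizer: str, target: str) -> str:
    # Toy refiner (an assumption for illustration): it only annotates the
    # target so that the chain of dependence can be read off the result.
    return f"{target} [refined by: {optimizer}]"


ladder = ["P0", "P1", "P2"]
result = run_cascade(ladder, toy_refine)
for prompt in result:
    print(prompt)
```

However many rungs are added to `ladder`, the last element always passes through unchanged, which is the regress in miniature.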

The Philosopher's Trap

This isn't merely a quaint thought experiment for computer scientists. It is a direct, modern confrontation with one of the most profound limitations of logic and epistemology, a principle known as the Münchhausen Trilemma. Rooted in the modes of the ancient skeptic Agrippa and named in the twentieth century by the philosopher Hans Albert after the famously self-elevating Baron Münchhausen, the trilemma argues that any attempt to prove a truth must end in one of three unsatisfactory states:

- An infinite regress, where each proof requires a further proof, ad infinitum.

- A circular argument, where the proof of some proposition is supported only by that proposition itself.

- An axiomatic stop, where the chain of reasoning rests on an unproven, foundational assumption accepted as true.

Our chain of prompts, $P_0, P_1, P_2, \dots, P_n$, is a perfect illustration of the first horn of the trilemma. We are caught in the infinite regress of justification. To escape it, to actually begin the work, we are forced to impale ourselves on the third horn. We must, at some point, declare a final, ultimate prompt, the one that starts the entire optimization cascade, to be axiomatically perfect. Let's call this the Prime Prompt, $P_{\text{prime}}$.

And here lies the central paradox, the ghost in our machine. Who writes the Prime Prompt?

We do. A human.

The "First Cause" of this entire chain of logical refinement, the very instruction that sets the crystalline gears of optimization in motion, is a product of the messy, intuitive, and fundamentally un-optimized biological mind. We are attempting to build a system of flawless logic, a cathedral of pure reason, upon the sand of human intuition. The foundational axiom is not a divine truth or a mathematical constant; it is a sentence typed by a person.

This dilemma echoes Kurt Gödel's Incompleteness Theorems. Gödel proved that any consistent formal system powerful enough to express basic arithmetic contains true statements that cannot be proven within that system. An AI is not literally such a formal system, but the analogy is instructive: the validity of our Prime Prompt plays the role of the unprovable truth at the heart of our AI's world. The AI can optimize every instruction it is given, it can follow its logic to the heat death of the universe, but it can never step outside its operational reality to validate the foundational instruction that defines that reality. It can check all the math, but it cannot question the axioms.

The Peril of "Good Enough"

Some may argue this is a philosophical indulgence. In practice, a pragmatic engineer might say, the returns diminish. After two or three layers of meta-optimization, the improvements to $P_0$ will likely become negligible. We can settle for "good enough."
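The pragmatist's stopping rule can be sketched in a few lines. The scoring scale, the threshold `min_gain`, and the numbers below are all made-up assumptions for illustration, not measurements: the sketch only shows what "stop when the returns diminish" would mean if a quality score for $P_0$ were available after each added layer.

```python
from typing import Sequence


def layers_worth_keeping(scores: Sequence[float], min_gain: float) -> int:
    """Return how many meta-layers to keep before gains drop below min_gain.

    scores[k] is a hypothetical quality score for P_0 after k layers of
    meta-optimization, with scores[0] being the unoptimized prompt.
    """
    for k in range(1, len(scores)):
        if scores[k] - scores[k - 1] < min_gain:
            return k - 1
    return len(scores) - 1


# Invented illustrative scores: large early gains, then a plateau.
example_scores = [0.60, 0.75, 0.80, 0.81, 0.81]
depth = layers_worth_keeping(example_scores, min_gain=0.02)
print(depth)
```

Note that both the score and the threshold are themselves chosen by a person, so the rule ends the regress by decision rather than by proof.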

But can we? When the systems in question are not merely generating poetry or images, but are tasked with managing global supply chains, discovering novel medicines, or even advising on geopolitical strategy, is "good enough" a tolerable standard for their foundational principles?

Imagine a Prime Prompt meant to guide an AI tasked with creating a "fair and prosperous global economic model." The human authors of this prompt, however brilliant, will embed their own inescapable cultural biases, blind spots, and incomplete definitions of "fairness" or "prosperity." A subtle flaw, an unexamined assumption in that initial instruction—the rounding error in the axiom—could then be recursively optimized by the AI into a perfectly efficient, monstrously wrong outcome. The system would not be failing; it would be succeeding, with terrifying precision, at the wrong task.

We are caught. We are building machines that demand a level of logical purity we ourselves cannot provide. We are the ghost in the machine, not as a mysterious consciousness, but as the flawed author of its foundational text. Every sophisticated AI, no matter how advanced, will operate within a conceptual framework whose ultimate origin point is an unprovable, un-optimized, human utterance. The AI's entire reality is a superstructure built upon a single, shaky pillar: our own fallible judgment.

The quest for the perfect prompt is, therefore, not a search for a set of words. It is a confrontation with our own limitations. We are trying to use an imperfect tool—human language, steeped in ambiguity and bias—to bootstrap a perfect system that can transcend its own imperfect origins. It is a snake eating its own tail, forever.

We have built a system that can optimize everything except its own genesis. We are creating a new kind of god, one that can rearrange the heavens and the earth, but can never question the creator that whispered its first command. And its creator, inescapably, is us.