The Physics and Anatomy of Speech: How Vowels and Consonants Forge Human Communication

Explore the intricate physics, anatomy, and information theory behind the universal vowel-consonant structure of human language, revealing it as a masterful engineering compromise essential to our species.

We perform the miracle casually, thousands of times a day. With a puff of air and the subtle dance of muscle, we give form to thought, transforming the silent electricity of our minds into the public reality of sound. The act of speaking feels so innate, so effortless, that we seldom pause to consider the architecture beneath it. Yet, this architecture, the universal duality of vowels and consonants, is arguably the most critical technology our species has ever mastered. It is a system so successful that it appears in every one of the thousands of spoken languages on Earth today.

This universality should give us pause. As skeptics, we are trained to be wary of "just-so" stories, those tidy evolutionary narratives that explain complex traits as perfect adaptations designed for a specific purpose. Is the vowel-consonant structure truly the optimal solution for vocal communication, an inevitable outcome of physics and biology? Or is it merely a historical accident, a system that worked well enough and became locked in by evolutionary chance?

The answer, I will argue, lies not in grand, untestable narratives about our ancestors' first words, but in a rigorous deconstruction of the act of speech itself. By examining language through the uncompromising lenses of physics, anatomy, and information theory, we can begin to see the vowel-consonant system for what it is: not an accident, but a sublime engineering compromise, forged over millions of years at the unforgiving interface of energy and information. It is a solution so elegant that if we were to design a biological communication system from scratch, we would likely arrive at something remarkably similar. To understand why, we must first abandon the familiar comfort of words and descend into the raw mechanics of the sounds that build them.

Part I: The Physics of a Phoneme

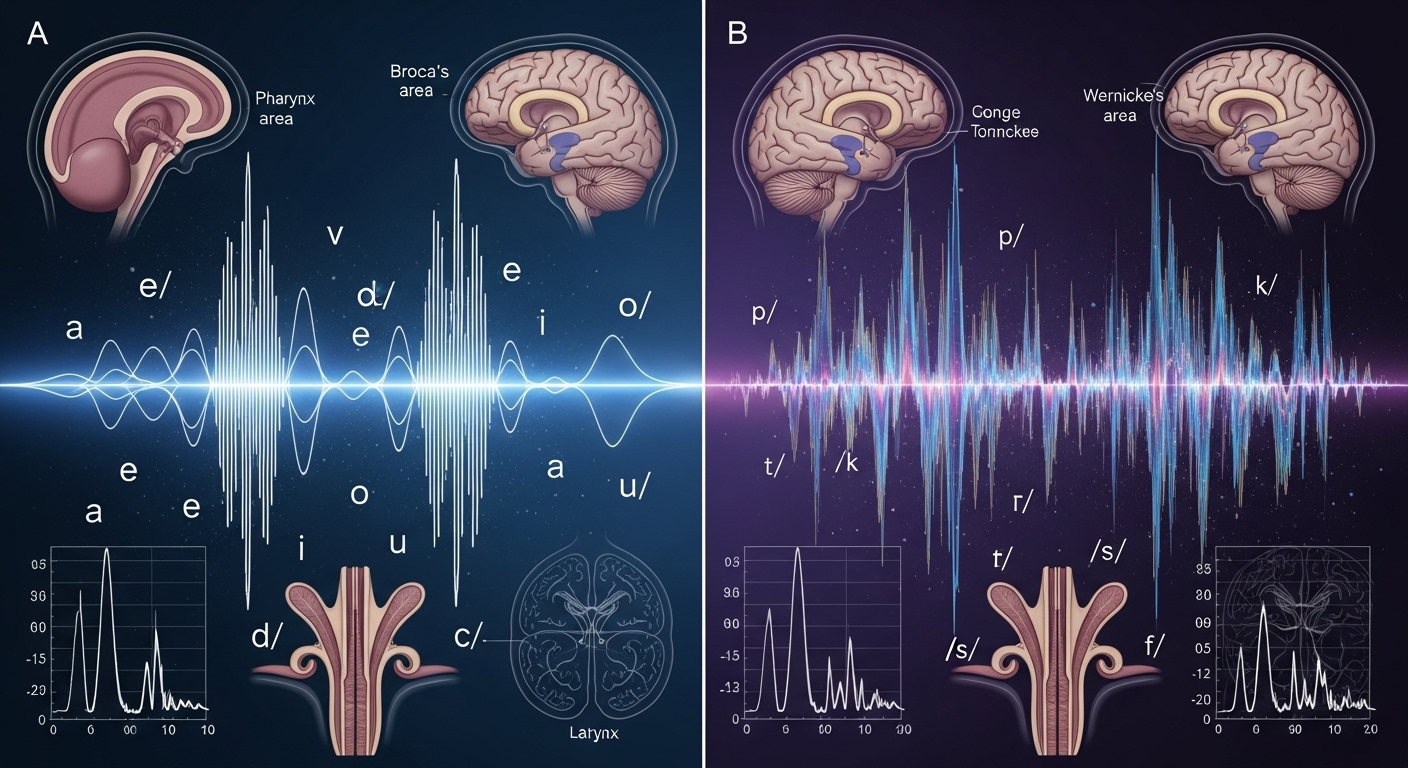

Speech, at its most basic level, is the controlled manipulation of sound waves. To understand its structure, we must first understand its raw material. The dominant model for this is the "source-filter theory," a beautifully simple concept that treats the production of voiced sound as a two-stage process.

The first stage is the source. Deep in our larynx, the vocal folds (or cords) act as a biological oscillator. Air pushed from the lungs forces them apart, and a combination of their own elasticity and aerodynamic effects (the Bernoulli principle) snaps them shut again. This rapid, rhythmic opening and closing—hundreds of times per second—chops the steady stream of air into a series of discrete puffs. The resulting sound wave is not a clean, simple sine wave like a tuning fork's. Rather, it is a complex, buzzing waveform known as a glottal pulse train.

From a physicist’s perspective, this buzz is a treasure trove of potential. A Fourier analysis reveals that it consists of a fundamental frequency ($f_0$), which we perceive as the pitch of the voice, plus a rich spectrum of harmonics—overtones at integer multiples of the fundamental frequency ($2f_0$, $3f_0$, $4f_0$, and so on). This harmonic richness is the raw clay from which speech will be sculpted. Without it, the voice would be a monotonous hum, incapable of forming distinct sounds.
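To make the harmonic series concrete, here is a minimal Python sketch. It uses a sawtooth wave as a crude stand-in for the glottal pulse train. This is an assumption: a real glottal waveform has a different shape and a steeper fall-off of energy toward high harmonics. All helper names are hypothetical. The sketch lists the harmonics of a given $f_0$, then uses a naive discrete Fourier transform to check that the sawtooth's energy sits at whole-number multiples of $f_0$ and not in between.

```python
import cmath
import math


def harmonic_frequencies(f0, max_freq):
    """Return the harmonic series f0, 2*f0, 3*f0, ... up to max_freq (all in Hz)."""
    return [k * f0 for k in range(1, int(max_freq // f0) + 1)]


def sawtooth_pulse_train(f0, sample_rate, n_samples):
    """Crude stand-in for a glottal pulse train: a zero-centred sawtooth at f0 Hz."""
    period = sample_rate / f0  # samples per glottal cycle
    return [(n % period) / period - 0.5 for n in range(n_samples)]


def dft_magnitude_at(signal, freq, sample_rate):
    """Magnitude of the DFT bin nearest `freq` Hz, via a naive O(N) sum over the samples."""
    n_samples = len(signal)
    k = round(freq * n_samples / sample_rate)  # bins are sample_rate / N Hz apart
    total = sum(x * cmath.exp(-2j * math.pi * k * n / n_samples)
                for n, x in enumerate(signal))
    return abs(total)


if __name__ == "__main__":
    f0, fs = 100.0, 8000.0
    signal = sawtooth_pulse_train(f0, fs, 800)  # 0.1 s = exactly 10 glottal cycles
    print(harmonic_frequencies(f0, 450))
    for freq in (100, 150, 200, 250, 300):
        # Multiples of 100 Hz carry energy; the in-between bins come out (numerically) zero.
        print(f"{freq} Hz: {dft_magnitude_at(signal, freq, fs):.3f}")
```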

The second stage is the filter. The raw buzz from the larynx travels up through the vocal tract—the pharynx, the oral cavity, and sometimes the nasal cavity. This entire passage, from larynx to lips, acts as a resonating chamber. Any tube of a specific size and shape has natural resonant frequencies at which it will vibrate most readily. Think of blowing across the top of a bottle: a small bottle produces a high-pitched note, a large one a low-pitched note. The vocal tract is an infinitely more complex and malleable version of this bottle.

When the harmonically rich buzz from the larynx passes through the vocal tract, the tract acts as a filter. It amplifies the source harmonics that fall near its natural resonant frequencies and dampens those that do not. These peaks of amplified acoustic energy are known as formants. It is the specific pattern of these formants—their frequency and relationship to one another—that is the defining acoustic signature of a vowel.
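A standard first approximation, which is an assumption here rather than something stated above, treats the vocal tract in its neutral posture (close to the vowel in the first syllable of "about") as a uniform tube. The tube is closed at the glottis and open at the lips. Such a tube resonates at odd multiples of a quarter wavelength, $F_n = (2n-1)\,c/(4L)$. The sketch below assumes a tract length of about 17.5 cm and a speed of sound of about 350 m/s. Both are illustrative values, and the helper names are hypothetical.

```python
def quarter_wave_formants(tract_length_m, n_formants, speed_of_sound=350.0):
    """Resonances of a uniform tube closed at one end (glottis) and open at the other (lips).

    F_n = (2n - 1) * c / (4 * L): the tube favours wavelengths of 4L, 4L/3, 4L/5, ...
    """
    return [(2 * n - 1) * speed_of_sound / (4 * tract_length_m)
            for n in range(1, n_formants + 1)]


def nearest_harmonic(formant_hz, f0):
    """The source harmonic (a whole-number multiple of f0) closest to a formant frequency."""
    return max(1, round(formant_hz / f0)) * f0


if __name__ == "__main__":
    formants = quarter_wave_formants(0.175, 3)
    print([round(f) for f in formants])  # roughly 500, 1500, 2500 Hz for the neutral tube
    # With a 110 Hz voice, these are the source harmonics the tract would favour most.
    print([nearest_harmonic(f, 110.0) for f in formants])
```

A shorter tube raises every resonance, which mirrors the bottle analogy: a 14 cm tract, for instance, puts F1 near 625 Hz instead of 500 Hz.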

When you produce the vowel /i/ (as in "see"), you raise the front of your tongue high in your mouth, creating a small resonating cavity in the front and a large one in the back. This configuration produces a low first formant (F1) and a very high second formant (F2). When you shift to the vowel /ɑ/ (as in "father"), you lower your jaw and pull your tongue low and back, widening the mouth cavity and narrowing the pharynx. This results in a higher F1 and a lower F2. Your brain, a masterful pattern-recognition machine, decodes these shifting formant patterns as distinct vowels with breathtaking speed and accuracy.
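The decoding step can be caricatured as a nearest-prototype classifier in the F1-F2 plane. The prototype values below are rough, illustrative averages for an adult male voice. They are assumed, not taken from this article, and real values vary widely across speakers, accents, and languages. The function name is hypothetical. Distances are measured on a logarithmic frequency scale, a simplifying assumption that keeps the wide F2 range from swamping F1.

```python
import math

# Illustrative (F1, F2) prototypes in Hz for an adult male voice; assumed round numbers.
VOWEL_PROTOTYPES = {
    "i": (270.0, 2290.0),  # as in "see": low F1, very high F2
    "ɑ": (730.0, 1090.0),  # as in "father": high F1, lowish F2
    "u": (300.0, 870.0),   # as in "boot": low F1, low F2
}


def classify_vowel(f1, f2, prototypes=VOWEL_PROTOTYPES):
    """Return the prototype vowel nearest to (f1, f2), using distance in log-frequency."""
    def distance(proto):
        p1, p2 = proto
        return math.hypot(math.log(f1 / p1), math.log(f2 / p2))
    return min(prototypes, key=lambda v: distance(prototypes[v]))


if __name__ == "__main__":
    print(classify_vowel(280, 2200))  # a token close to the /i/ prototype
    print(classify_vowel(700, 1150))  # a token close to the /ɑ/ prototype
```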

This is a critical point: vowels are not arbitrary sounds. They are the acoustic consequences of sculpting our vocal tract into different shapes. The classic vowels /i/, /ɑ/, and /u/ represent the acoustic vertices of human phonetics, corresponding to the most extreme configurations our articulators can achieve. Their acoustic distinctiveness is not a matter of cultural convention but a direct result of the physics of resonance.

But what of consonants? If vowels are the product of harmonic sculpting, consonants are the product of controlled disruption. They are created by introducing a significant constriction or complete closure somewhere in the vocal tract, fundamentally altering the nature of the sound. Instead of the periodic, harmonic wave of a vowel, many consonants, above all stops and fricatives, are largely aperiodic: noisy and chaotic.

Consider the fricative /s/. Air is forced through a narrow channel between the tongue and the alveolar ridge, creating turbulence. This turbulence generates a chaotic, high-frequency hiss, a sound akin to white noise. A plosive, like /t/, is even more dynamic. It involves a three-part sequence: a period of complete silence as the tongue blocks the airflow and pressure builds up behind it; a sudden, transient burst of broadband noise as the closure is released; and often a puff of aspiration.

Herein lies the fundamental physical dichotomy of speech: the periodic, resonant, high-energy hums of vowels versus the aperiodic, noisy, low-energy hisses and pops of consonants. This is not a linguistic classification; it is a physical reality. One is a state of stable resonance; the other is a state of controlled disruption. The entire edifice of spoken language is built upon the systematic alternation between these two physical states.
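One way to make the periodic/aperiodic contrast measurable is normalized autocorrelation. A periodic signal lines up with a copy of itself shifted by one period, while noise lines up well with no shifted copy. The sketch below relies on several assumptions. A sawtooth stands in for a voiced, vowel-like source, and seeded uniform random numbers stand in for fricative turbulence. The sample rate is 8 kHz, and the lag range covers pitch periods from 40 to 400 Hz. The helper names are hypothetical.

```python
import math
import random


def periodicity(signal, min_lag, max_lag):
    """Best normalized autocorrelation over the lag range: near 1 for periodic input, near 0 for noise."""
    mean = sum(signal) / len(signal)
    c = [x - mean for x in signal]
    best = 0.0
    for lag in range(min_lag, max_lag + 1):
        head, tail = c[:-lag], c[lag:]  # the signal and its shifted copy, same length
        num = sum(a * b for a, b in zip(head, tail))
        den = math.sqrt(sum(a * a for a in head) * sum(b * b for b in tail))
        if den > 0:
            best = max(best, num / den)
    return best


def sawtooth(f0, sample_rate, n_samples):
    """Periodic stand-in for a voiced, vowel-like source."""
    period = sample_rate / f0
    return [(n % period) / period - 0.5 for n in range(n_samples)]


def hiss(n_samples, seed=0):
    """Aperiodic stand-in for fricative turbulence: uniform white noise."""
    rng = random.Random(seed)
    return [rng.uniform(-1.0, 1.0) for _ in range(n_samples)]


if __name__ == "__main__":
    fs = 8000
    lags = (fs // 400, fs // 40)  # candidate pitch periods for 40-400 Hz voices
    print(f"vowel-like: {periodicity(sawtooth(100.0, fs, 800), *lags):.3f}")
    print(f"hiss-like:  {periodicity(hiss(800), *lags):.3f}")
```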

Of course, the skeptic in us should recognize that this neat model is a simplification. In reality, speech is a continuous, flowing stream. The acoustic properties of a consonant are smeared into the following vowel in what linguists call "formant transitions." The phoneme, as a discrete, indivisible unit, is largely a cognitive abstraction imposed by our brain to make sense of this messy signal. But it is an abstraction that works, precisely because it maps so well onto the underlying physical states of production.

Part II: The Anatomical Gambit

The elegant physics of the source-filter model is only half the story. It describes how our vocal tract works, but not why we possess such a peculiar and dangerous instrument in the first place. The answer requires us to delve into the fossil record and confront one of evolution's most curious trade-offs.

The key anatomical feature that enables the modern human range of vowels is our descended larynx. In most mammals, including our closest primate relatives, the larynx sits high in the throat, allowing them to breathe and swallow at the same time—a handy trick for avoiding choking. In adult humans, the larynx has migrated significantly lower. This repositioning, combined with a flexion of the base of the skull, created the unique L-shaped vocal tract, with a vertical pharyngeal cavity and a horizontal oral cavity of roughly equal length.

This anatomy is a bio-acoustic marvel. It allows the tongue, now endowed with a deep root in the pharynx, to move both vertically and horizontally, independently modulating the size and shape of the two resonating cavities. This is what unlocks the full vowel space, particularly the acoustically distinct "point vowels" /i/, /ɑ/, and /u/. A chimpanzee, with its high larynx and single-tube vocal tract, simply cannot produce this range of sounds.

But this gift came at a terrible price. A low larynx means the paths for air and food cross deep in our throat, making us uniquely susceptible to choking to death. From a purely survivalist perspective, this is a catastrophic design flaw. For such a dangerous trait to have been selected for, the evolutionary advantage it conferred must have been immense.

The traditional "just-so" story is that the larynx descended for the purpose of speech. A skeptic, however, must ask if this is the only, or even the most likely, explanation. Perhaps the descended larynx was an exaptation for speech: a feature that evolved for one reason and was only later co-opted for its current function. Some researchers have proposed alternative drivers for the descended larynx, such as its role in creating the illusion of larger body size for intimidating rivals or attracting mates. It is also possible that the anatomical shifts were a secondary consequence of our transition to bipedalism and the associated changes in skull architecture.

In this more cautious view, our ancestors did not evolve a descended larynx in order to speak. Rather, once this anatomical arrangement was in place for other reasons, it proved to be a pre-adaptation of monumental importance. It turned our vocal tract into a high-fidelity sound synthesizer, and the stage was set for the evolution of the complex neural software needed to control it.

That software is distributed throughout the brain. The classic textbook model points to Broca's area for production and Wernicke's area for comprehension. But this is a gross oversimplification. Modern neuroimaging reveals that language draws on a widely distributed network of brain regions. The fine, rapid-fire motor control required to articulate a single sentence involves vast swathes of the motor cortex. The cerebellum, long thought to be involved only in posture and balance, is now understood to be critical for the precise timing of syllables. And the whole network is bound together by massive white matter tracts, like the arcuate fasciculus.

Genetic evidence, while often sensationalized, provides another piece of the puzzle. The gene FOXP2, for instance, is not "the language gene." It is a regulatory gene, a high-level manager that influences the expression of many other genes. Its modern human variant, which we appear to share with Neanderthals, is crucial for the development of the neural circuits that underpin fine-grained sequential motor control—exactly the kind of control needed for both complex tool use and articulation. Individuals with a mutated form of the gene have severe difficulties with grammar and the coordination of oro-facial movements. FOXP2 did not create language, but it appears to be a necessary component of the genetic toolkit that allows a brain to acquire and execute it.

Finally, an instrument is useless without a finely tuned receiver. Our auditory system did not evolve in a vacuum; it co-evolved with our vocal apparatus. The cochlea in our inner ear acts as a biological Fourier analyzer, separating complex sound waves into their constituent frequencies. The auditory cortex is then tasked with pattern recognition. One of its most remarkable feats is categorical perception. When listening to a sound that is acoustically halfway between a /b/ and a /p/, we do not hear an ambiguous blend. Our brain forces a choice; we hear either a clear /b/ or a clear /p/. This neurological shortcut transforms a messy, continuous acoustic world into the neat, discrete phonemic categories that language relies on. It simplifies the decoding process, allowing for faster and more reliable comprehension.
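Categorical perception can be sketched as a steep identification function. The acoustic cue used here is voice onset time (VOT), the delay between the release of the closure and the start of vocal-fold vibration. VOT is a primary cue separating English /b/ from /p/. The 25 ms boundary and the 3 ms steepness are assumed, illustrative parameters, not measurements, and the function names are hypothetical.

```python
import math


def prob_hear_p(vot_ms, boundary_ms=25.0, steepness_ms=3.0):
    """Probability of labelling a stop as /p/ rather than /b/ (a logistic identification curve)."""
    return 1.0 / (1.0 + math.exp(-(vot_ms - boundary_ms) / steepness_ms))


def perceived(vot_ms, **kwargs):
    """The discrete category a listener reports: the continuum collapses to two labels."""
    return "p" if prob_hear_p(vot_ms, **kwargs) >= 0.5 else "b"


if __name__ == "__main__":
    # Evenly spaced steps along a /b/-/p/ continuum: labels flip abruptly near the boundary.
    for vot in range(0, 61, 10):
        print(f"VOT {vot:2d} ms -> P(/p/) = {prob_hear_p(vot):.3f} -> /{perceived(vot)}/")
```

The evenly spaced acoustic steps do not produce evenly spaced percepts. Almost all of the change in labelling happens within a few milliseconds of the boundary, which is the signature of a categorical rather than a gradual response.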

Part III: The Information Imperative

We now have the physical components (a source and filter) and the biological hardware (a specialized anatomy, brain, and auditory system). But what principle governs the structure of the code that runs on this hardware? For this, we must turn to one of the 20th century's most profound scientific achievements: Claude Shannon's Information Theory.

At its heart, language is a communication system, and its fundamental purpose is to transmit information reliably across a noisy channel. Shannon's work provides the mathematical tools to analyze how any such system works. The central tension is between efficiency and redundancy. An efficient code packs a lot of information into a small signal, but it is vulnerable to corruption by noise. A redundant code is less efficient but more robust.
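The efficiency-redundancy trade-off can be quantified with the simplest textbook case: a repetition code over a binary symmetric channel. This is a choice of illustration, not a model of speech. Each bit is flipped with probability p. Sending the bit n times and taking a majority vote cuts the error rate, at the cost of an n-fold drop in efficiency (a rate of 1/n). The function name and the 10% noise level are assumptions.

```python
from math import comb


def majority_error(p, n):
    """Probability that a majority vote over n noisy copies (n odd) decodes a bit wrongly.

    Decoding fails when more than half of the n copies are flipped,
    each copy independently with probability p.
    """
    if n % 2 == 0:
        raise ValueError("use an odd number of repetitions to avoid ties")
    return sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(n // 2 + 1, n + 1))


if __name__ == "__main__":
    p = 0.1  # assumed channel noise: 10% of bits flipped
    for n in (1, 3, 5):
        print(f"rate 1/{n}: error {majority_error(p, n):.4f}")
```

Tripling the signal drops the error rate from 10% to 2.8%. Robustness is bought with bandwidth, which is the same bargain the text describes.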

The vowel-consonant duality is a masterful solution to this engineering problem. It elegantly resolves a fundamental trade-off between energy and information.

Vowels are the high-energy, low-information carriers. As we've seen, they are periodic, resonant sounds that contain most of the acoustic power of speech. They are physically robust, capable of traveling long distances and punching through background noise. From an informational standpoint, however, they are less potent. Most languages function with a relatively small inventory of vowels (typically 5 to 15). The probability of encountering any given vowel is therefore relatively high, so its average "surprisal" value, or information content, is low. The primary role of the vowel is to be the loud, stable, and audible engine of the syllable.
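The surprisal argument can be checked with Shannon's measure: an outcome of probability $p$ carries $-\log_2 p$ bits. For illustration only, the sketch assumes that every segment in an inventory is equally likely, although real phoneme frequencies are far from uniform. It uses hypothetical inventory sizes of 5 vowels and 22 consonants.

```python
import math


def surprisal_bits(probability):
    """Shannon information content of an outcome: -log2(p) bits."""
    return -math.log2(probability)


def uniform_inventory_bits(inventory_size):
    """Surprisal of each segment if all segments in the inventory were equally likely."""
    return surprisal_bits(1.0 / inventory_size)


if __name__ == "__main__":
    print(f"one of 5 vowels:      {uniform_inventory_bits(5):.2f} bits")
    print(f"one of 22 consonants: {uniform_inventory_bits(22):.2f} bits")
```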

Consonants are the low-energy, high-information modulators. They are typically transient, noisy, and contain far less acoustic energy than vowels. They are the fragile part of the signal, the first to be lost in a noisy room or over a bad phone line. But informationally, they are powerhouses. Most languages have a larger inventory of consonants than vowels. More importantly, consonants are the primary differentiators of meaning in the lexicon. A single rime (a vowel plus its final consonant), like -at, can be preceded by a host of different consonants to create distinct words: cat, bat, sat, fat, mat, pat, rat, that, chat, gnat. Each consonant carries a high information load because it dramatically reduces the listener's uncertainty about which word is being spoken.
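The claim that a consonant dramatically reduces the listener's uncertainty can be made concrete by counting how many words remain possible after one segment is heard. The toy lexicon below is hypothetical and uses spelling as a rough stand-in for phonemes. It also assumes all words are equally likely. Information gained is then $\log_2$ of the factor by which the candidate set shrinks.

```python
import math

# Hypothetical toy lexicon of three-letter CVC words (spelling standing in for phonemes).
LEXICON = ["cat", "bat", "sat", "fat", "mat", "pat", "rat",
           "cot", "cut", "kit", "bit", "sit"]


def bits_gained(lexicon, position, symbol):
    """Bits of uncertainty removed by learning the segment at `position`, words equiprobable."""
    remaining = [w for w in lexicon if w[position] == symbol]
    return math.log2(len(lexicon) / len(remaining))


if __name__ == "__main__":
    print(f"hearing the vowel 'a':             {bits_gained(LEXICON, 1, 'a'):.2f} bits")
    print(f"hearing the initial consonant 'c': {bits_gained(LEXICON, 0, 'c'):.2f} bits")
```

The numbers depend entirely on the lexicon chosen. A lexicon rich in vowel contrasts would tilt the result the other way, so this illustrates the reasoning rather than proving the claim.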

The syllable, typically structured around a vowel nucleus (V) with optional preceding (C) and following (C) consonants, is the optimal packet for transmitting this code. It ingeniously packages a fragile, high-information element (the consonant) with a robust, high-energy carrier (the vowel). This C-V structure allows the speech stream to be segmented into discrete, digestible chunks. It imposes a digital-like rhythm onto an analog signal, allowing the brain to parse the flow of sound into meaningful units.
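A toy syllabifier shows how a stream of segments can be chunked into vowel-centred packets. It assumes letters stand for phonemes and treats a, e, i, o, u as the only vowels. It applies a deliberately simple, hypothetical rule: each vowel is a nucleus, and of the consonants between two nuclei, the last becomes the onset of the next syllable while any others close the previous one. Real syllabification rules are language-specific and considerably more subtle.

```python
def syllabify(word, vowels=frozenset("aeiou")):
    """Split a string of segments into syllables, one per vowel nucleus (toy rule)."""
    nuclei = [i for i, seg in enumerate(word) if seg in vowels]
    if not nuclei:
        return [word]  # no nucleus: nothing to build a syllable around
    boundaries = [0]  # leading consonants join the first syllable's onset
    for prev, nxt in zip(nuclei, nuclei[1:]):
        if nxt - prev > 1:
            boundaries.append(nxt - 1)  # last intervening consonant becomes the next onset
        else:
            boundaries.append(nxt)      # adjacent vowels (hiatus): split between them
    boundaries.append(len(word))  # trailing consonants close the final syllable
    return [word[a:b] for a, b in zip(boundaries, boundaries[1:])]


if __name__ == "__main__":
    for w in ("banana", "kitten", "aorta"):
        print(w, "->", "-".join(syllabify(w)))
```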

This alternation between two fundamentally different acoustic states, open-throated resonance and constricted turbulence, creates a signal that is both informationally dense and physically robust. A language of only vowels would be loud and musical but lexically impoverished, incapable of generating a large enough vocabulary. A language of only consonants would be a series of faint clicks and hisses: informationally rich in principle, but too weak to be transmitted reliably. The marriage of the two is not just a happy accident; it is a near-perfect solution to the constraints imposed by physics and the demands of information transfer.
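A back-of-the-envelope count shows why a vowel-only code would be lexically cramped. The sketch assumes hypothetical inventories of 5 vowels and 20 consonants. It allows only simple V or CV syllables, any of which may follow any other. Under those assumptions, the number of distinct forms of a given length is (syllable inventory)^length.

```python
def distinct_forms(syllable_inventory, n_syllables):
    """Number of distinct words exactly n_syllables long, if any syllable may follow any other."""
    return syllable_inventory ** n_syllables


if __name__ == "__main__":
    vowels, consonants = 5, 20          # hypothetical inventory sizes
    v_only = vowels                     # syllables of the form V
    cv = consonants * vowels + vowels   # syllables of the form CV, plus bare V
    for n in (1, 2, 3):
        print(f"{n} syllable(s): V-only {distinct_forms(v_only, n):>9,}   CV {distinct_forms(cv, n):>9,}")
```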

The skeptic might ask: Is this truly "optimal," or is it just the solution that our peculiar primate biology stumbled upon? Could an alien species with a different anatomy—say, one that communicates with modulated light or chemical signals—develop a system that is equally or more efficient? Absolutely. But given the constraints of producing sound with a biological apparatus in a terrestrial atmosphere, the vowel-consonant system is a remarkably effective local optimum.

Part IV: Reconstructing Echoes

The ultimate test of our theory lies in the past. If this architecture is so fundamental, we should be able to see its evolutionary precursors in the fossil record. Here, however, we must tread with extreme caution. We have no fossils of words. Any attempt to reconstruct the speech of our extinct relatives is an exercise in disciplined inference, bordering on speculation. A true skeptic must be brutally honest about the limits of our knowledge.

What can the bones tell us? The high larynx of australopithecines, inferred from the shape of their skulls, would have severely restricted their vowel space. They could make sounds, but they could not speak with the phonetic richness of modern humans. The story becomes more compelling with later hominins. The discovery of a modern-looking hyoid bone (a crucial anchor for tongue muscles) from a Neanderthal skeleton at Kebara Cave, Israel, is tantalizing. It implies that Neanderthals likely had the anatomical potential for complex articulation. Similarly, analyses of their middle ear bones suggest their hearing was tuned to the same frequency range as our own. Reconstructions of their thoracic vertebrae suggest they had the fine breath control necessary for speech.

But potential is not performance. Several research groups have used detailed anatomical reconstructions to model the Neanderthal vocal tract and simulate the sounds it could produce. These studies suggest a phonetic range somewhat different from our own yet apparently capable of speech, but we must acknowledge the long chain of assumptions involved. Soft tissue does not fossilize. The precise shape of the pharynx, the size of the tongue, the thickness of the lips: all of these are educated guesses. These reconstructions are fascinating hypotheses, not established facts.

Perhaps the most powerful evidence is not anatomical but behavioral. The archaeological record shows a gradual accumulation of cognitive complexity. The remarkably standardized Acheulean handaxe, which remained a core technology for over a million years, is difficult to explain without a sophisticated system of teaching across generations and vast distances, likely a form of proto-language. The appearance of symbolic behavior over the last few hundred thousand years (the use of pigments like ochre, the deliberate burial of the dead, the creation of personal ornaments) strongly implies a mind capable of abstract thought and shared beliefs. Such a shared symbolic world is hard to imagine without a communication system to build and sustain it.

This was not a single event, but a slow, iterative process. A feedback loop was likely established: a small improvement in vocal ability enabled better social coordination (e.g., in hunting or toolmaking), which conferred a survival advantage, which in turn placed further selective pressure on the evolution of even better vocal and cognitive abilities. Language did not spring forth fully formed; it was bootstrapped over a million years of hominin evolution.

Conclusion: The Necessary Duality

We return to our initial question. Is the universal architecture of language an accident or an inevitability? The evidence, when viewed through the dispassionate lens of science, points strongly toward the latter. The vowel-consonant structure is not a mere cultural convention. It is a deeply rooted solution to an engineering problem, dictated by the immutable laws of physics and sculpted by the contingent path of our biological evolution.

It is the product of a grand feedback loop. The descent of the larynx, perhaps for reasons unrelated to speech, created an instrument of unprecedented acoustic potential. The brain evolved the neural circuitry to master this instrument. The relentless pressure to transmit more information more reliably in a noisy world favored a code that balanced energy and information. The result was the syllable, a perfect marriage of a robust vowel carrier and a precise consonantal modulator.

As skeptics, we must remain humble. The ultimate origin of the first word is a secret that the past will likely never yield. Our story, however compelling, is built on the echoes of sounds we can never hear and the shadows of soft tissues we can never see. But the consistency of the evidence—from the mathematics of a sound wave to the shape of a fossilized bone—provides a powerful and coherent framework. It reveals that the simple, everyday act of alternating between an open-throated vowel and a constricted consonantal hiss is the very foundation of our humanity. Upon this fundamental physical duality rests the entire magnificent edifice of human culture, science, and thought. The answer to why we speak this way is, in a very real sense, the answer to what we are.