Anatomy of a Cascading Failure: Deconstructing the October 20, 2025, AWS Outage

A deep technical analysis of the October 20, 2025, AWS outage, revealing how a failure in network load balancer health monitoring triggered a cascading failure through automation, and outlining essential lessons for building truly resilient cloud architectures.

Executive Summary

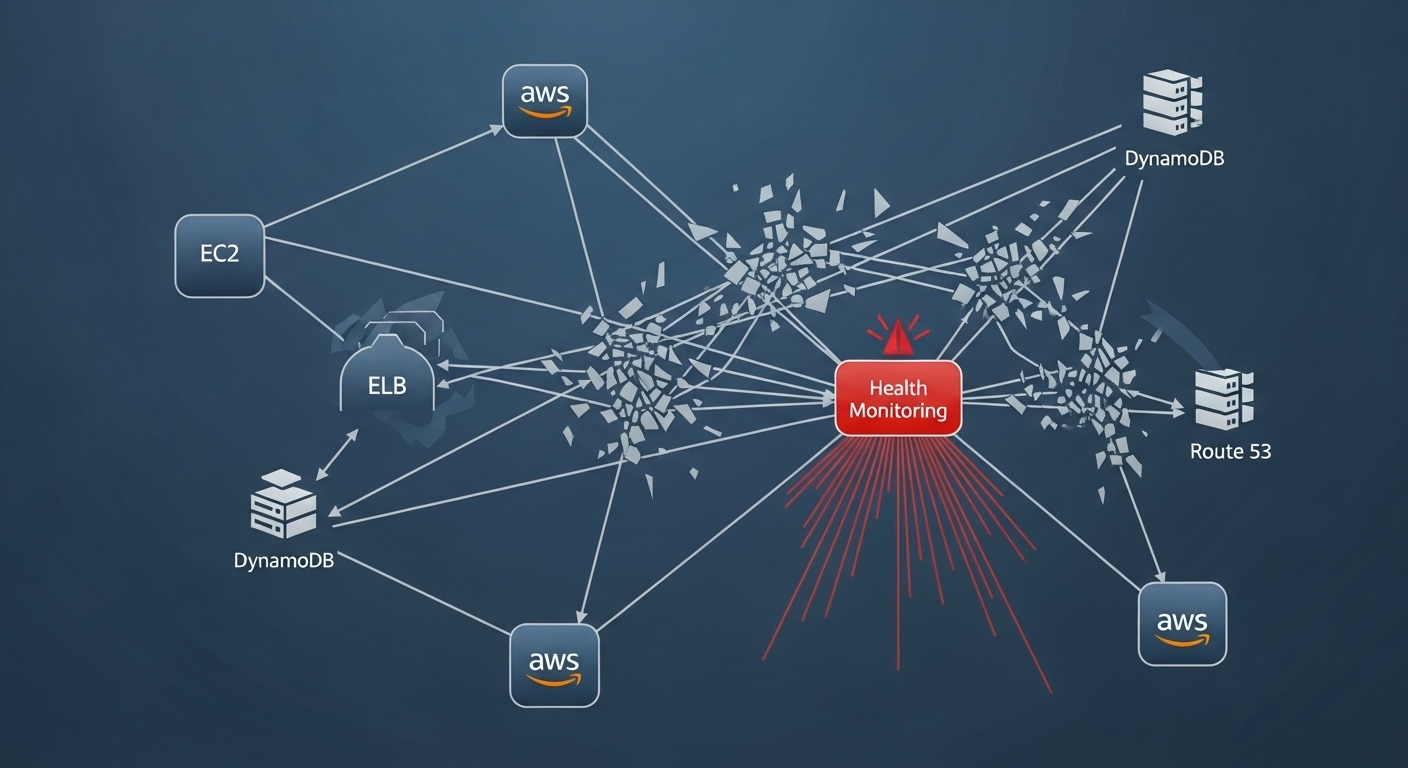

On October 20, 2025, Amazon Web Services (AWS) experienced a significant service disruption originating in its US-EAST-1 (Northern Virginia) region, which resulted in a widespread global outage. The event impacted thousands of digital services, ranging from social media and gaming platforms to critical financial and government infrastructure. Initial public-facing symptoms pointed towards Domain Name System (DNS) resolution failures and Application Programming Interface (API) errors, particularly affecting the Amazon DynamoDB service. However, subsequent investigation by AWS revealed a more deeply rooted cause: a malfunction within an "underlying internal subsystem responsible for monitoring the health of our network load balancers."

This report provides a comprehensive technical analysis of the outage, reconstructing the event timeline and dissecting the architectural components involved. The analysis demonstrates that the incident was a textbook example of a cascading failure within a large-scale distributed system. The failure originated not in the data plane responsible for routing traffic, but in a control plane-adjacent monitoring system. This initial fault triggered a catastrophic positive feedback loop, where automated resiliency mechanisms—specifically, the integration between Elastic Load Balancing (ELB) health checks and EC2 Auto Scaling—began to systematically dismantle healthy infrastructure in a misguided attempt to self-heal.

Key findings of this report indicate that the widespread "DNS issues" were a secondary effect of the load balancing system withdrawing healthy targets from service. The subsequent "health check storm," fueled by EC2 Auto Scaling's automated termination of instances incorrectly marked as unhealthy, placed an untenable load on the EC2 control plane. This necessitated AWS's critical mitigation strategy of throttling new EC2 instance launches to break the feedback loop and stabilize the region.

The event serves as a critical case study on the inherent risks of tightly coupled automation and correlated failures in modern cloud architectures. It underscores the limitations of traditional multi-Availability Zone (AZ) redundancy in the face of regional control plane failures and highlights the architectural imperative for true multi-region resilience. This report concludes with actionable recommendations for architects and engineers, focusing on decoupling health checks, implementing advanced multi-region strategies, and designing systems that can gracefully degrade rather than fail catastrophically.

Section 1: The Digital Blackout: Timeline and Global Impact

The service disruption of October 20, 2025, was not an instantaneous event but a rapidly escalating crisis that began with subtle indicators before precipitating a global digital blackout. This section reconstructs the incident's progression, documents the full scope of its impact on the global digital ecosystem, and analyzes how AWS's public communications evolved as its understanding of the root cause deepened.

1.1. Initial Tremors: The Onset of Latency and Error Rates

The first public indication of a problem emerged at approximately 12:11 AM PDT (3:11 AM ET).¹ The AWS Health Dashboard, the official channel for service status communication, posted an alert acknowledging "increased error rates and latencies for multiple AWS services in the US-EAST-1 Region".³ This initial message, common in the early stages of a large-scale incident, reflected the ambiguity of the situation, where symptoms manifest across numerous, seemingly disparate services, making immediate root cause identification exceedingly difficult.⁵

Within an hour and a half, by 1:26 AM PDT, AWS engineers had narrowed the focus to a critical database service, Amazon DynamoDB. The dashboard was updated to confirm "significant error rates for requests made to the DynamoDB endpoint in the US-EAST-1 Region".² This early and prominent mention of DynamoDB shaped the initial public narrative, leading many customers and media outlets to believe the outage was primarily a database issue.² This focus, while technically accurate from a client-symptom perspective, masked the more fundamental networking-related failure that was already propagating through the system's foundational layers.

1.2. The Global Domino Effect: A Cross-Section of Affected Services

The failure within the US-EAST-1 region, AWS's oldest and one of its most critical infrastructure hubs, quickly triggered a global domino effect.⁸ The concentration of core service control planes and inter-service dependencies in this region meant that a localized fault had an outsized blast radius, crippling services worldwide.

- Consumer and Social Platforms: A vast number of consumer-facing applications became partially or completely inaccessible. Users of Snapchat, Roblox, Signal, Reddit, Duolingo, and the popular game Wordle reported widespread failures, from login issues to complete service unavailability.²

- Gaming and Entertainment: The entertainment sector was heavily impacted, with major gaming platforms including Fortnite, Pokémon Go, the Epic Games Store, and the PlayStation Network experiencing significant disruptions. Amazon's own services were not immune, as Amazon Prime Video and the Amazon.com retail site also suffered from the outage.³

- Financial and Business Services: The incident exposed the deep reliance of the financial sector on cloud infrastructure. In the UK, established institutions like Lloyds Bank and its subsidiaries Halifax and Bank of Scotland reported issues.⁸ Simultaneously, modern financial technology (FinTech) platforms, including the cryptocurrency exchange Coinbase, the trading app Robinhood, and the payment service Venmo, were rendered inoperable, preventing users from accessing funds or executing trades.¹

- Government and Enterprise: The outage's reach extended into public services, with the UK's HM Revenue and Customs (HMRC) website becoming inaccessible, highlighting the integration of government functions with commercial cloud providers.⁸

The scale of the disruption was immense. The internet outage monitoring service Downdetector recorded a peak of over 8 million user reports of problems globally. This included nearly 1.9 million reports from the United States and over a million from the United Kingdom, with hundreds of thousands more from countries like Germany, the Netherlands, and Australia, demonstrating the event's truly international impact.¹³

1.3. The Fog of an Outage: Tracing AWS's Evolving Public Diagnosis

The sequence of official statements from AWS throughout the incident provides a clear window into the process of a complex root cause analysis under extreme pressure. The narrative evolved from describing broad, user-facing symptoms to pinpointing a specific, underlying subsystem failure.

- Phase 1: Symptom-Based Reporting (The DNS Issue): The initial public-facing diagnosis, emerging around 2:01 AM PDT, centered on the most direct symptom experienced by client applications: a problem with "DNS resolution of the DynamoDB API endpoint".¹ This was a technically correct description of the failure from an external perspective, since applications were indeed failing to resolve or connect to service endpoints. However, this was not the origin of the fault but rather its most visible consequence. By 3:35 AM PDT, AWS announced that the "underlying DNS issue has been fully mitigated," a statement that, while likely true for the immediate symptom, proved premature as widespread service impairments continued.¹

- Phase 2: Narrowing the Locus (EC2 Internal Network): As the investigation deepened and initial fixes failed to restore full service, AWS's communications shifted. At 8:04 AM PDT, an update stated that the issue "originated from within the EC2 internal network".¹³ This marked a significant pivot, moving the investigation's focus away from a public-facing service like DNS and toward a foundational infrastructure layer internal to the EC2 service. This indicated that the problem was not with the DNS records themselves but with the reachability of the resources they pointed to.

- Phase 3: Pinpointing the Root Cause (NLB Health Monitoring): The definitive diagnosis was finally communicated at 8:43 AM PDT, roughly eight and a half hours after the first public alert at 12:11 AM PDT. AWS stated, "The root cause is an underlying internal subsystem responsible for monitoring the health of our network load balancers".¹³ This statement identified a failure not in a data plane component that routes traffic, but in a control plane-adjacent system responsible for observing and reporting on the health of that data plane. This crucial distinction explains why the outage was so perplexing and widespread, as the system's own automated safety mechanisms were being fed faulty information.

The following table provides a consolidated timeline of key events and communications during the outage, compiled from official AWS statements and reputable media reports.

| Time (PDT) | Time (EDT) | Time (UTC) | Event Description / Official Statement | Key Services Mentioned | Source(s) |
|---|---|---|---|---|---|
| Oct 20, 12:11 AM | Oct 20, 3:11 AM | Oct 20, 07:11 | AWS first reports "investigating increased error rates and latencies for multiple AWS services in the US-EAST-1 Region." | Multiple Services | 2 |
| Oct 20, 1:26 AM | Oct 20, 4:26 AM | Oct 20, 08:26 | AWS confirms "significant error rates for requests made to the DynamoDB endpoint in the US-EAST-1 Region." | DynamoDB | 2 |
| Oct 20, 2:01 AM | Oct 20, 5:01 AM | Oct 20, 09:01 | AWS identifies a potential root cause related to "DNS resolution of the DynamoDB API endpoint." | DynamoDB, IAM | 2 |
| Oct 20, 2:22 AM | Oct 20, 5:22 AM | Oct 20, 09:22 | AWS reports applying initial mitigations and observing "early signs of recovery for some impacted AWS Services." | Multiple Services | 19 |
| Oct 20, 3:35 AM | Oct 20, 6:35 AM | Oct 20, 10:35 | AWS states "The underlying DNS issue has been fully mitigated, and most AWS Service operations are succeeding normally now." | Multiple Services | 1 |
| Oct 20, 7:14 AM | Oct 20, 10:14 AM | Oct 20, 14:14 | After a period of perceived recovery, AWS confirms "significant API errors and connectivity issues across multiple services in the US-EAST-1 Region." | Multiple Services | 21 |
| Oct 20, 8:04 AM | Oct 20, 11:04 AM | Oct 20, 15:04 | AWS updates that the issue "originated from within the EC2 internal network." | DynamoDB, SQS, Amazon Connect | 13 |
| Oct 20, 8:43 AM | Oct 20, 11:43 AM | Oct 20, 15:43 | AWS narrows down the source, stating: "The root cause is an underlying internal subsystem responsible for monitoring the health of our network load balancers." | AWS Services | 13 |
| Oct 20, 8:43 AM | Oct 20, 11:43 AM | Oct 20, 15:43 | As a mitigation, AWS announces: "We are throttling requests for new EC2 instance launches to aid recovery." | EC2 | 13 |

The progression of these statements reveals a classic diagnostic funnel. The initial alerts focused on the broad, external symptoms (API errors, DNS failures) that were most visible to customers. As engineers traced the causal chain backward, they moved deeper into the internal infrastructure, from the application layer (DynamoDB) to the networking layer (EC2 internal network) and finally to the meta-layer of monitoring and control (the NLB health subsystem). This journey from symptom to cause is fundamental to understanding the complex, interdependent nature of the AWS platform.
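The three time columns in the timeline, and the interval between the first alert and the root cause statement, can be cross-checked with a few lines of arithmetic. The sketch below assumes the fixed daylight-time offsets in effect on October 20, 2025 (UTC-7 for PDT, UTC-4 for EDT); the helper names `pdt` and `as_row` are illustrative only.

```python
from datetime import datetime, timedelta, timezone

# Fixed UTC offsets assumed for October 20, 2025 (US daylight time).
PDT = timezone(timedelta(hours=-7), "PDT")
EDT = timezone(timedelta(hours=-4), "EDT")


def pdt(hour: int, minute: int) -> datetime:
    """Build an October 20, 2025 timestamp from a PDT wall-clock time."""
    return datetime(2025, 10, 20, hour, minute, tzinfo=PDT)


def as_row(ts: datetime) -> tuple[str, str, str]:
    """Render one timestamp as (PDT, EDT, UTC) in 24-hour HH:MM form."""
    fmt = "%H:%M"
    return (
        ts.astimezone(PDT).strftime(fmt),
        ts.astimezone(EDT).strftime(fmt),
        ts.astimezone(timezone.utc).strftime(fmt),
    )


first_alert = pdt(0, 11)   # 12:11 AM PDT: first Health Dashboard post
root_cause = pdt(8, 43)    # 8:43 AM PDT: NLB health monitoring statement

# Time between the first public alert and the root cause statement.
elapsed = root_cause - first_alert
```

Under these assumptions, `elapsed` comes to 8 hours 32 minutes, and `as_row(first_alert)` reproduces the first row of the timeline in 24-hour notation.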

Section 2: The Epicenter: Deconstructing the Network Load Balancer and its Health Monitoring Subsystem

To fully comprehend the root cause of the October 20 outage, it is essential to understand the architecture and function of the components at the heart of the failure. The AWS Network Load Balancer (NLB) is not a monolithic entity but a highly distributed, sophisticated service built upon foundational AWS networking technologies. The failure occurred within a subsystem responsible for monitoring this complex machinery.

2.1. The Unseen Backbone: AWS Hyperplane

The Network Load Balancer, along with other foundational services like NAT Gateway, AWS PrivateLink, and Gateway Load Balancer, is powered by an internal network virtualization platform known as AWS Hyperplane.²⁴ Hyperplane is a horizontally scalable, multi-tenant state management system designed to process terabits per second of network traffic across the AWS global infrastructure.²⁵

Hyperplane's architecture is a prime example of a statically stable design, separating its control plane from its data plane.²⁴

- The Data Plane: This is the high-performance packet-forwarding component. It consists of a massive fleet of devices that process customer traffic based on a set of routing and configuration rules.²⁴

- The Control Plane: This component is responsible for managing customer configurations. It periodically scans its data stores (such as Amazon DynamoDB tables) for customer configurations, compiles this information into files, and writes them to Amazon S3.²⁴

The data plane servers then periodically and independently poll these S3 files to update their local configuration caches. This decoupled architecture is highly resilient; an outage in the control plane does not immediately impact the data plane, which can continue to operate using its last known good configuration.²⁴ The October 20 outage did not appear to be a failure of the Hyperplane data plane itself—traffic could physically flow—but rather a failure in an adjacent system responsible for providing health state information to the control plane.
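The "last known good configuration" behavior described above can be illustrated with a minimal sketch. It is not Hyperplane's implementation, which is not public; the class `DataPlaneNode`, the `fetch_snapshot` callback, and the `routes` key are hypothetical names chosen for the example.

```python
import json
from typing import Callable, Optional


class DataPlaneNode:
    """Hypothetical forwarding node that polls a published config snapshot."""

    def __init__(self, fetch_snapshot: Callable[[], str]) -> None:
        # fetch_snapshot stands in for reading a compiled config file
        # (for example, an object in S3); it returns raw JSON text.
        self._fetch_snapshot = fetch_snapshot
        self._config: Optional[dict] = None  # last known good configuration
        self.stale_polls = 0                 # consecutive failed refreshes

    def poll(self) -> None:
        """Refresh the local cache, keeping the old config on any failure."""
        try:
            candidate = json.loads(self._fetch_snapshot())
            if not isinstance(candidate, dict) or "routes" not in candidate:
                raise ValueError("snapshot is missing the 'routes' table")
        except Exception:
            # Control plane unreachable or snapshot malformed: keep serving
            # from the cached configuration instead of failing.
            self.stale_polls += 1
            return
        self._config = candidate
        self.stale_polls = 0

    def route(self, flow_key: str) -> Optional[str]:
        """Look up a destination using only locally cached state."""
        if self._config is None:
            return None
        return self._config["routes"].get(flow_key)
```

The design choice worth noting is that `route` never touches the network: forwarding decisions depend only on local state, so a failed poll degrades freshness rather than availability.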

2.2. The Traffic Conductor: Architecture of the Network Load Balancer (NLB)

The Network Load Balancer operates at the fourth layer of the OSI model (the transport layer), managing TCP and UDP traffic flows.²⁸ It is designed for extreme performance, capable of handling millions of requests per second with ultra-low latency, making it ideal for high-throughput workloads.²⁸

The logical components of an NLB are as follows:

- Listeners: A listener checks for connection requests from clients on a specific protocol and port. When a valid request is received, the listener forwards it to a target group.³¹

- Target Groups: A target group is a logical grouping of resources, known as targets, that receive traffic from the load balancer. The target group defines the protocol and port for routing traffic to the registered targets and contains the configuration for health checks.³²

- Targets: These are the ultimate destinations for traffic, such as EC2 instances, containers, or IP addresses, registered within a target group.³³

This architecture also reflects a control plane and data plane separation. The NLB data plane is the Hyperplane-powered infrastructure that performs the actual packet forwarding from clients to targets. The NLB control plane consists of the APIs and underlying systems that manage the configuration of listeners, the registration and deregistration of targets, and—most critically for this incident—the execution and interpretation of health checks. The failure on October 20 was a breakdown within this control plane functionality.

2.3. The Sentinel System: A Technical Deep Dive into NLB Health Checks

The subsystem identified by AWS as the root cause is responsible for monitoring the health of NLB targets. This is a critical function that enables the load balancer to route traffic away from failing or overloaded instances, thereby ensuring high availability.³²

NLB health monitoring employs a combination of two methods:

- Active Health Checks: The load balancer periodically sends a health check request to each registered target on a configurable interval (e.g., every 30 seconds).³⁶ These checks can use various protocols (TCP, HTTP, HTTPS) and are considered successful or unsuccessful based on configurable parameters like response timeouts and expected success codes.³⁶ If a target fails a configured number of consecutive checks (the "unhealthy threshold"), the load balancer marks it as unhealthy and stops routing new traffic to it.³⁷

- Passive Health Checks: In addition to active probing, NLB also observes how targets respond to actual client traffic. This allows it to detect an unhealthy target—for instance, one that is repeatedly resetting connections—even before the next active health check occurs. This feature cannot be configured or disabled by users.⁴⁰

A crucial behavior of Elastic Load Balancing is the concept of "fail-open." If all registered targets within a target group are simultaneously marked as unhealthy, the load balancer will override its normal logic and route traffic to all of them.³⁶ This is a last-resort safety mechanism designed to prevent a faulty health check configuration or a widespread, transient issue from taking an entire service offline. It assumes that it is better to send traffic to potentially unhealthy targets than to send it nowhere at all.
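The threshold logic and the fail-open rule described above can be sketched in a few lines. This is an illustrative model only: the threshold values and the names `Target`, `record_check`, and `routable_targets` are assumptions made for the example, not the NLB's internal implementation.

```python
from dataclasses import dataclass

# Assumed example values; real target groups make these configurable.
UNHEALTHY_THRESHOLD = 2  # consecutive failures before marking unhealthy
HEALTHY_THRESHOLD = 3    # consecutive successes before marking healthy again


@dataclass
class Target:
    name: str
    healthy: bool = True
    fail_streak: int = 0
    ok_streak: int = 0


def record_check(target: Target, passed: bool) -> None:
    """Apply one active health check result to a target's state."""
    if passed:
        target.ok_streak += 1
        target.fail_streak = 0
        if not target.healthy and target.ok_streak >= HEALTHY_THRESHOLD:
            target.healthy = True
    else:
        target.fail_streak += 1
        target.ok_streak = 0
        if target.healthy and target.fail_streak >= UNHEALTHY_THRESHOLD:
            target.healthy = False


def routable_targets(targets: list[Target]) -> list[Target]:
    """Return healthy targets, or every target if none is healthy (fail-open)."""
    healthy = [t for t in targets if t.healthy]
    return healthy if healthy else list(targets)
```

Note that fail-open only engages when every target is marked unhealthy. If a faulty monitor misreports most, but not all, of a fleet, the remaining targets absorb the full load.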

The failure of the monitoring subsystem meant that this entire sentinel system became unreliable. Instead of accurately reflecting the state of the backend fleet, it began providing false signals. This faulty intelligence, fed into the highly automated AWS ecosystem, was the trigger for the cascading failure that followed. The problem was not with the network's ability to forward packets, but with the control system's ability to know where to send them.

Section 3: The Cascade: A Root Cause Analysis of a System-Wide Failure

This section synthesizes the event timeline and technical architecture to construct a detailed, evidence-based analysis of how the failure of a single monitoring subsystem propagated into a region-wide, multi-service outage. The incident was not a single event but a chain reaction, where automated resiliency features, operating on faulty data, amplified the initial problem into a catastrophic feedback loop.

3.1. The Failure Hypothesis: When the Watcher Fails

The central hypothesis, based on AWS's final root cause statement, is that the internal subsystem responsible for monitoring NLB target health experienced a critical malfunction. This malfunction could have taken several forms: a software bug causing it to misinterpret valid health responses, a failure in its own data collection mechanism, or a resource exhaustion issue that prevented it from processing health check results correctly.

Regardless of the specific internal mechanism, the outcome was the same: the system began to erroneously report a massive number of healthy, functioning targets across the US-EAST-1 region as "unhealthy." This created a large-scale correlated failure, a scenario where numerous components that are designed to be independent fail simultaneously due to a single, shared underlying cause.⁴² In this case, the shared cause was the reliance of thousands of distinct services on this single, now-faulty, monitoring subsystem for health state information.

3.2. From Health Checks to DNS Errors: Unraveling Inter-Service Dependencies

The immediate effect of this mass misclassification of healthy targets was the systematic removal of service capacity. The automated systems designed to ensure reliability began to actively dismantle the very infrastructure they were meant to protect.

- Effect 1: NLB Removes "Unhealthy" Targets and Triggers DNS Failover: The primary responsibility of a load balancer is to route traffic exclusively to healthy targets.³² As the monitoring system broadcast false "unhealthy" signals, NLBs across the region would have immediately stopped sending new connections to a vast number of their backend servers. This alone would cause significant API errors and latency. Furthermore, the Network Load Balancer is deeply integrated with Amazon Route 53 for DNS-level failover. When an NLB's nodes in a particular Availability Zone determine that they have no healthy targets to route to, Route 53 is designed to automatically remove the DNS records corresponding to the load balancer's IP addresses in that zone.²⁸ When this happened at a massive scale across the region, it manifested to the outside world as the widely reported "DNS issue." Client applications attempting to resolve service hostnames (like the DynamoDB API endpoint) would have received no answer, resulting in widespread connection failures.¹ The DNS failures were not the root cause but a direct, automated consequence of the underlying health check failure.

- Effect 2: Impact on Foundational AWS Services: Many foundational AWS services, such as Amazon DynamoDB, Amazon SQS, and even the EC2 control plane itself, are built as highly available, distributed systems that utilize internal load balancing for traffic management and reliability. When the health monitoring for these internal load balancers failed, their own API endpoints became unreachable or unstable. This directly explains why services like DynamoDB and SQS were among the first and most prominently affected, leading to the "significant API errors" that AWS reported.¹³ The failure cascaded upward from the hidden internal networking layer to the public-facing service APIs that countless applications depend on.

3.3. The "Health Check Storm": How Auto Scaling Amplified the Failure

While the removal of targets from load balancers was a significant problem, the most destructive phase of the outage was triggered by the interaction between the faulty health checks and EC2 Auto Scaling. This interaction created a powerful and destructive positive feedback loop.

EC2 Auto Scaling groups can be configured to use ELB health checks as a signal for instance health. If an instance fails its ELB health check, the Auto Scaling group's automation will deem the instance unhealthy, terminate it, and launch a new replacement instance in its place.⁴⁰ This is a powerful feature for maintaining fleet health against isolated instance failures.

However, in the context of a correlated monitoring failure, this feature became a vector for mass destruction. The sequence of events for thousands of Auto Scaling groups across the region would have been as follows:

- The faulty monitoring subsystem incorrectly marks a healthy, in-service EC2 instance as "unhealthy."

- The ELB service relays this status to the associated Auto Scaling group.

- The Auto Scaling group, acting on this information, initiates a termination action against the healthy instance and requests a new EC2 instance from the EC2 control plane to maintain its desired capacity.

- The new instance launches, bootstraps its application, and is registered with the load balancer.

- However, the monitoring subsystem is still malfunctioning. It immediately marks the new, perfectly healthy replacement instance as "unhealthy."

- The cycle repeats, with the Auto Scaling group terminating the new instance and launching another.

This created a massive, self-inflicted "termination storm" or "health check storm" across the entire US-EAST-1 region. Thousands of automated systems began simultaneously and continuously terminating healthy infrastructure and requesting new capacity, creating a vicious cycle.⁴⁷ This churn placed an unprecedented and unsustainable load on the control planes of multiple AWS services, particularly the EC2 control plane responsible for launching and terminating instances.
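The scale of the churn in this loop can be approximated with a deliberately simple, deterministic model. The sketch below assumes that the faulty monitor flags a fixed fraction of in-service instances each cycle and that every flagged instance is terminated and immediately replaced; the function `simulate_storm` and all numbers are hypothetical and not drawn from AWS data.

```python
from dataclasses import dataclass


@dataclass
class CycleStats:
    cycle: int
    in_service: int    # instances running at the end of the cycle
    terminations: int  # terminate requests sent to the control plane
    launches: int      # launch requests sent to the control plane


def simulate_storm(
    desired: int, cycles: int, false_unhealthy_fraction: float
) -> list[CycleStats]:
    """Model a fleet whose healthy instances keep being flagged and replaced."""
    if not 0.0 <= false_unhealthy_fraction <= 1.0:
        raise ValueError("fraction must be between 0 and 1")
    in_service = desired
    history = []
    for cycle in range(1, cycles + 1):
        # The faulty monitor flags healthy instances; Auto Scaling terminates them.
        terminations = int(in_service * false_unhealthy_fraction)
        in_service -= terminations
        # Auto Scaling requests replacements to get back to desired capacity.
        launches = desired - in_service
        in_service += launches
        history.append(CycleStats(cycle, in_service, terminations, launches))
    return history


# Example: one 1,000-instance fleet, half of it misreported each cycle.
history = simulate_storm(desired=1000, cycles=3, false_unhealthy_fraction=0.5)
total_requests = sum(s.terminations + s.launches for s in history)
```

In this model the fleet size never changes, yet the control plane receives 1,000 requests per cycle for as long as the monitor stays broken. Multiplied across thousands of Auto Scaling groups, that is the load pattern the text describes.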

3.4. The Control Plane Under Siege: Why Throttling EC2 Launches Was the Key to Recovery

The final piece of the puzzle is understanding AWS's primary mitigation action, announced at 8:43 AM PDT: "We are throttling requests for new EC2 instance launches to aid recovery".¹³ This action was not aimed at fixing the network connectivity or the monitoring bug itself. Its purpose was to break the catastrophic feedback loop described above.

The health check storm had effectively launched a massive, internal Distributed Denial of Service (DDoS) attack against the EC2 control plane. This control plane was being flooded with an enormous volume of launch and terminate requests generated by its own automation. This overload likely prevented legitimate recovery actions, hampered engineers' ability to deploy fixes, and may even have been the source of the "new issue" with "significant API errors" that emerged later in the morning, around 7:14 AM PDT.¹⁸

By rate-limiting or "throttling" new instance launches, AWS engineers manually intervened to stop the churn. This prevented Auto Scaling groups from immediately replacing the instances they were terminating, effectively breaking the positive feedback loop. This action provided the necessary "breathing room" for the overwhelmed control planes to stabilize. With the system no longer actively destroying itself, engineers could focus on isolating and remediating the root cause within the NLB health monitoring subsystem. The throttling was a crucial, albeit blunt, instrument to halt the cascading failure and create the stable conditions necessary for a methodical recovery. It demonstrates that in hyper-automated, large-scale systems, sometimes the most effective recovery tool is the ability to temporarily disable the automation itself.
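AWS has not described how its launch throttle works internally. A token bucket is one common way to implement this kind of rate limit, and the sketch below uses it purely as an illustration; the class name `TokenBucket` and its parameters are assumptions. The clock is passed in by the caller so the behavior is deterministic.

```python
class TokenBucket:
    """Admit at most `rate_per_sec` requests per second, with a small burst."""

    def __init__(self, rate_per_sec: float, burst: int) -> None:
        self.rate = rate_per_sec
        self.capacity = float(burst)
        self.tokens = float(burst)  # start with a full bucket
        self.last = 0.0             # time of the previous refill, in seconds

    def allow(self, now: float) -> bool:
        """Return True if a launch request arriving at `now` is admitted."""
        elapsed = max(0.0, now - self.last)
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + elapsed * self.rate)
        self.last = now
        if self.tokens >= 1.0:
            self.tokens -= 1.0
            return True
        return False  # throttled: caller must back off and retry later


# Example: 100 simultaneous launch requests against a 2-request burst limit.
bucket = TokenBucket(rate_per_sec=1.0, burst=2)
admitted = sum(bucket.allow(now=0.0) for _ in range(100))
```

Here only 2 of the 100 simultaneous requests are admitted. The rest are rejected cheaply at the front door, which is what gives an overloaded control plane room to drain its backlog.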

Section 4: Architecting for Resilience in a Concentrated Cloud

The October 20 outage serves as a powerful case study, moving beyond theoretical discussions of cloud resilience to a concrete demonstration of how modern, hyper-scale systems can fail. The incident revealed the limitations of common architectural patterns and provided critical lessons for engineers and architects responsible for building reliable services on AWS. This section distills those lessons into actionable, prescriptive guidance.

4.1. The US-EAST-1 Paradox: The Limits of Traditional Multi-AZ Redundancy

For years, the foundational principle of high availability on AWS has been the multi-Availability Zone (AZ) architecture. By distributing resources across multiple, physically isolated data centers within a single region, applications can withstand the failure of an entire AZ. However, this outage starkly illustrated the limits of this model.

The failure was not a physical one, like a power outage or network partition affecting a single AZ. It was a logical failure within a regional control plane service—the NLB health monitoring subsystem. Because this service is regional in scope, its malfunction impacted all AZs within US-EAST-1 simultaneously.¹⁰ The faulty health signals were broadcast across the entire region, causing automated systems in every AZ to take incorrect actions in unison. This synchronized, region-wide failure rendered the multi-AZ redundancy strategy ineffective for this specific class of event.

Furthermore, the incident highlighted the "US-EAST-1 paradox": its status as AWS's oldest and most foundational region means that many global services, such as AWS Identity and Access Management (IAM), have their primary control planes homed there.² Consequently, a severe control plane failure in US-EAST-1 can have global repercussions, affecting authentication and management operations in other, otherwise healthy regions. This demonstrates that for the highest tiers of availability, thinking beyond a single region is no longer optional, but essential.

4.2. Advanced Resilience Patterns

To build systems capable of withstanding regional control plane failures, architects must adopt more sophisticated resilience patterns that go beyond simple multi-AZ deployments.

4.2.1. Multi-Region Resiliency Models

The most direct way to mitigate a regional failure is to have the ability to operate out of a different, unaffected region. Two primary models exist:

- Multi-Region Active-Passive (Failover): In this model, a primary region serves all traffic, while a secondary, "standby" region is kept in a state of readiness. Infrastructure is provisioned in the standby region, and data is asynchronously replicated from the primary. In the event of a primary region failure, DNS routing (using services like Amazon Route 53 with health checks) is updated to direct all traffic to the standby region. This model is simpler to manage but involves a recovery time objective (RTO) that includes the time to detect the failure and execute the failover.

- Multi-Region Active-Active: This more complex model involves serving traffic from multiple regions simultaneously. Using DNS policies like latency-based or geolocation routing, users are directed to the nearest healthy region. This architecture offers the lowest RTO, as traffic can be shifted away from a failing region almost instantaneously. However, it introduces significant complexity around data synchronization, as data must be kept consistent across all active regions, often requiring technologies like DynamoDB Global Tables or custom replication solutions.⁵⁰
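The routing decision behind the two models can be sketched as a pair of selection functions. This is a simplified illustration of the policy logic, not of Route 53 itself; the `Region` type, the priority field, and the latency figures are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Region:
    name: str
    healthy: bool
    latency_ms: float  # measured from the client's vantage point
    priority: int = 0  # lower value = preferred (active-passive only)


def pick_active_passive(regions: list[Region]) -> Optional[Region]:
    """Send all traffic to the highest-priority region that is healthy."""
    healthy = [r for r in regions if r.healthy]
    return min(healthy, key=lambda r: r.priority, default=None)


def pick_active_active(regions: list[Region]) -> Optional[Region]:
    """Send each client to the lowest-latency region that is healthy."""
    healthy = [r for r in regions if r.healthy]
    return min(healthy, key=lambda r: r.latency_ms, default=None)


# Example: the primary region is impaired, so both policies fall back.
regions = [
    Region("us-east-1", healthy=False, latency_ms=20.0, priority=0),
    Region("us-west-2", healthy=True, latency_ms=70.0, priority=1),
]
```

Both functions depend entirely on the accuracy of the `healthy` flag, so the health signal that drives failover should itself be independent of the region being evaluated.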

4.2.2. Decoupling Health Checks from Automated Termination

A critical lesson from the "health check storm" is the danger of a monolithic health check that triggers a destructive action like instance termination. A more resilient approach involves decoupling different types of health checks and associating them with appropriate, less destructive actions.

- Liveness Probes: These are simple checks to verify that an instance and its core application process are running (e.g., a basic TCP check or an EC2 instance status check). A failure of a liveness probe is a strong signal that the instance is fundamentally broken and should be terminated and replaced by the Auto Scaling group.

- Readiness Probes: These are more comprehensive checks that verify if an application is fully initialized and ready to accept traffic (e.g., an HTTP endpoint that returns 200 OK only after all dependencies are connected). A readiness probe failure should signal the load balancer to remove the instance from the traffic rotation. However, it should not cause the Auto Scaling group to terminate the instance. This allows the instance time to recover from a transient issue without being destroyed.

- Dependency Health Checks (Deep Health Checks): These checks validate the health of downstream dependencies, such as databases or external APIs. As AWS best practices strongly advise, and as this outage demonstrated, the results of deep health checks should never be tied to Auto Scaling termination actions.⁴⁵ A failure in a dependency could cause the entire fleet to fail these checks simultaneously. Tying this to termination would trigger the exact kind of mass termination event seen during the outage. Instead, a deep health check failure should only be used by the load balancer to temporarily route traffic away from the affected instances.
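The mapping from probe type to action described in the list above can be written as a small decision function. The names `Action` and `decide` are illustrative, and in a real deployment the three signals would come from separate endpoints or checks rather than boolean arguments.

```python
from enum import Enum


class Action(Enum):
    KEEP_IN_SERVICE = "keep_in_service"
    REMOVE_FROM_ROTATION = "remove_from_rotation"    # load balancer only
    TERMINATE_AND_REPLACE = "terminate_and_replace"  # Auto Scaling


def decide(liveness_ok: bool, readiness_ok: bool, dependencies_ok: bool) -> Action:
    """Choose the least destructive action consistent with the probe results."""
    if not liveness_ok:
        # The process or instance is fundamentally broken: replace it.
        return Action.TERMINATE_AND_REPLACE
    if not readiness_ok or not dependencies_ok:
        # The instance is alive but should not take traffic right now.
        # Readiness and dependency failures never lead to termination.
        return Action.REMOVE_FROM_ROTATION
    return Action.KEEP_IN_SERVICE
```

Only the liveness signal can reach the destructive branch, so a fleet-wide dependency or monitoring failure can at worst drain traffic; it cannot trigger mass termination.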

4.2.3. Implementing Graceful Degradation and Intelligent Failover

Instead of designing systems that either work perfectly or fail completely, resilient architectures aim for graceful degradation. When a dependency fails, the system should continue to operate in a limited but useful capacity.

- Circuit Breakers: This pattern involves wrapping calls to external dependencies in a proxy object. If calls to a dependency start failing, the circuit breaker "trips" and immediately fails subsequent calls without even attempting to contact the failing service. This prevents the application from wasting resources on calls that are destined to fail and protects the downstream service from being overwhelmed by retries.⁵²

- Rate Limiting and Load Shedding: During a partial failure, the remaining healthy capacity of a system can be quickly overwhelmed. Implementing rate limiting at the API gateway or load balancer layer can protect the system by rejecting excess traffic. Load shedding is a more sophisticated technique where the system starts intentionally dropping lower-priority requests to ensure that high-priority requests can still be served.⁵²
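A minimal circuit breaker, as described in the first item above, might look like the following. It is a sketch under simplifying assumptions (single-threaded use, a consecutive-failure threshold, one trial call after the cool-down); the names `CircuitBreaker` and `CircuitOpenError` are hypothetical, and production code would more likely use an established library.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected without contacting the dependency."""


class CircuitBreaker:
    def __init__(
        self,
        failure_threshold: int = 3,
        reset_after: float = 30.0,
        clock: Callable[[], float] = time.monotonic,
    ) -> None:
        self._failure_threshold = failure_threshold
        self._reset_after = reset_after  # seconds to wait before a trial call
        self._clock = clock
        self._failures = 0               # consecutive failures seen so far
        self._opened_at: Optional[float] = None

    def call(self, fn: Callable[[], T]) -> T:
        """Run fn through the breaker, failing fast while the circuit is open."""
        if self._opened_at is not None:
            if self._clock() - self._opened_at < self._reset_after:
                raise CircuitOpenError("circuit open; failing fast")
            # Cool-down elapsed: fall through and allow one trial call.
        try:
            result = fn()
        except Exception:
            self._failures += 1
            if self._failures >= self._failure_threshold:
                self._opened_at = self._clock()  # trip, or re-trip after a trial
            raise
        # Success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

Callers typically catch `CircuitOpenError` and serve a degraded response, such as cached data or a default value, which is how the pattern connects to graceful degradation.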

4.2.4. Proactive Failure Detection with Chaos Engineering

The most resilient systems are those that are regularly tested against failure. Chaos engineering is the practice of deliberately injecting failures into a system, ideally in production and with a limited blast radius, to identify hidden weaknesses and cascading failure modes. For example, an engineering team could run an experiment in which the ELB health check system incorrectly reports a percentage of healthy instances as unhealthy. This would let them verify that their automation responds correctly (by removing instances from traffic but not terminating them) and expose any potential for a "health check storm" in a controlled manner, before a real outage occurs.
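Before running such an experiment against real infrastructure, the expected difference between the two automation policies can be explored with a toy model. The sketch below is a pure simulation under stated assumptions: every instance is actually healthy, a faulty monitor flags each one as unhealthy with a fixed probability per round, and each termination produces exactly one replacement launch request. The function and field names are hypothetical, and nothing here calls AWS.

```python
import random
from dataclasses import dataclass


@dataclass
class ExperimentResult:
    terminations: int     # instances destroyed by automation
    launch_requests: int  # replacement launches sent to the control plane
    min_in_service: int   # lowest number of instances serving traffic in any round


def run_experiment(fleet_size: int, false_unhealthy_rate: float,
                   terminate_on_failure: bool, rounds: int = 10,
                   seed: int = 0) -> ExperimentResult:
    rng = random.Random(seed)  # seeded so runs are reproducible
    terminations = 0
    launch_requests = 0
    min_in_service = fleet_size
    for _round in range(rounds):
        # Every instance is healthy; the fault is in the monitor's reports.
        flagged = sum(
            1 for _instance in range(fleet_size)
            if rng.random() < false_unhealthy_rate
        )
        # Flagged instances are out of rotation under either policy.
        min_in_service = min(min_in_service, fleet_size - flagged)
        if terminate_on_failure:
            # Coupled policy: each flagged instance is terminated and replaced.
            terminations += flagged
            launch_requests += flagged
    return ExperimentResult(terminations, launch_requests, min_in_service)


if __name__ == "__main__":
    coupled = run_experiment(1000, 0.2, terminate_on_failure=True)
    decoupled = run_experiment(1000, 0.2, terminate_on_failure=False)
    print("coupled:  ", coupled)
    print("decoupled:", decoupled)
```

Both policies lose the same serving capacity while the monitor misreports, but only the coupled policy generates terminations and replacement launches. That launch volume is the control plane load the experiment is meant to surface.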

The following table provides a comparative analysis of these resilience strategies, offering a framework for architects to evaluate the tradeoffs between cost, complexity, and the level of protection they provide.

| Strategy | Protection Against | Cost | Operational Complexity | Key AWS Services |
|---|---|---|---|---|
| Single-Region Multi-AZ | Single AZ failure (e.g., power, network partition) | Low | Low | Elastic Load Balancing, EC2 Auto Scaling, Multi-AZ RDS |
| Multi-Region Active-Passive | Regional service outage, regional control plane failure | Medium | Medium | Route 53 Failover, S3 Cross-Region Replication, DynamoDB Global Tables (for data) |
| Multi-Region Active-Active | Regional service outage, regional control plane failure | High | High | Route 53 (Latency/Geolocation), AWS Global Accelerator, DynamoDB Global Tables |
| Decoupled Health Checks | Cascading failures from dependency issues, "health check storms" | Low | Medium | ELB Health Checks, Custom CloudWatch Metrics, Lambda |
| Graceful Degradation | Overload from dependency failures, cascading request failures | Low-Medium | Medium | AWS API Gateway (throttling), Application-level logic (Circuit Breakers) |

By carefully selecting and combining these strategies, organizations can build architectures that are not only resilient to common failures but are also prepared for the more insidious and complex failures of the control planes that manage modern cloud infrastructure.

Conclusion: Beyond the Five Nines

The Amazon Web Services outage of October 20, 2025, was a profound and humbling lesson in the intricate nature of modern, hyper-scale distributed systems. It was not a failure of hardware, nor a simple software bug in a single application. It was a systemic failure of a control plane, where the very automation designed to ensure resilience became the primary vector for a region-wide collapse. The incident demonstrated that in a tightly coupled, highly automated environment, a logical error in a centralized monitoring system can be far more destructive than the physical failure of an entire data center.

The analysis reveals a clear causal chain: a faulty health monitoring subsystem broadcast incorrect state information, which triggered a series of automated but inappropriate responses. Resiliency features like DNS failover and Auto Scaling health check replacements, designed to handle isolated failures, instead acted as amplifiers, propagating the initial error in a catastrophic positive feedback loop that overwhelmed the regional control plane. The critical mitigation—throttling EC2 instance launches—underscores a vital principle of complex systems management: sometimes, the most important recovery action is to temporarily disable the automation.

This event forces a re-evaluation of what it means to build resilient applications in the cloud. It shows that a multi-AZ architecture, while essential, does not protect against regional control plane failures. For truly critical workloads, a multi-region strategy is no longer a luxury but a necessity. Furthermore, the incident highlights the urgent need for more nuanced health checking strategies that decouple the detection of a problem from the destructive action of termination.

Ultimately, the outage serves as a stark reminder that as our systems become more complex and more automated, their failure modes also become more complex and counterintuitive. Achieving high availability—moving beyond the theoretical "five nines"—is not merely about adding redundancy. It requires a deep and continuous effort to understand the hidden dependencies between services, to test for correlated and cascading failure modes, and to build systems that can fail gracefully, recognizing that even the most sophisticated automation can, under the wrong circumstances, become the architect of its own demise.